ULOOF: User Level Online Offloading Framework

Mobile devices have limited processing power and battery capacity. Mobile computation offloading can provide a better user experience in terms of both computation time and energy consumption.

Since 2012, we have been working on a lightweight mobile computation offloading framework that users can exploit without any specific involvement of application developers and without having to root the mobile device. We named the solution ULOOF (User Level Online Offloading Framework).

ULOOF is equipped with machine learning logic and a smart decision engine to minimize both the execution time and the energy consumption of mobile Android applications, without requiring any modification of the underlying device operating system. ULOOF's objective is to provide offloading functionality to any existing Android application by inspecting, decompiling and recompiling it.

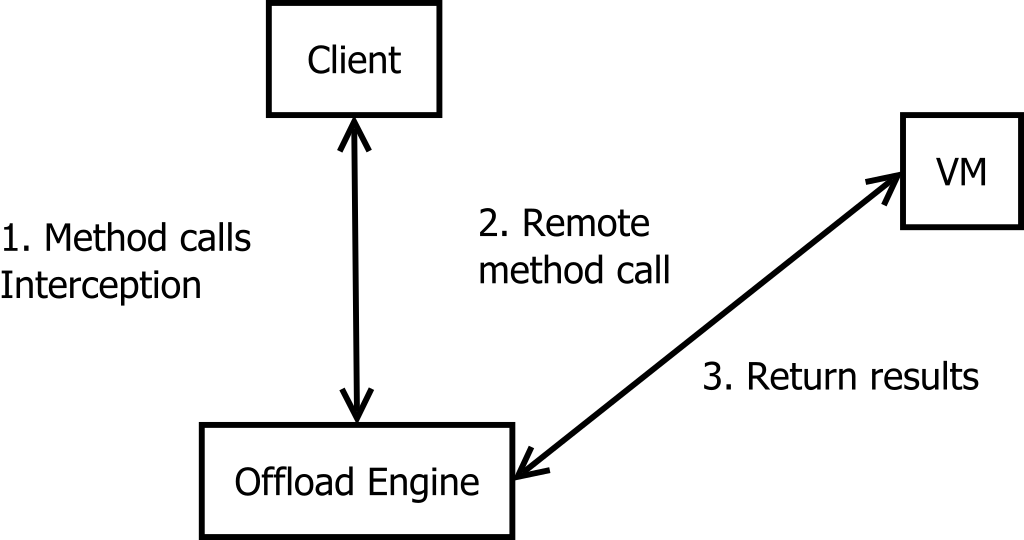

The figure above gives a high-level representation of the framework. A Virtual Machine (VM) emulating a remote Android device is called for remote execution when the local decision engine, which applies machine learning to the energy consumption and execution time measured at the method level, decides to offload a method call. Additional post-processing tasks on the original APK may be run on the VM rather than on the device. Instead of the VM, another Android device (to support device-to-device computation offloading) or a bare-metal machine could also be used.
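As a rough illustration of per-method learning, the sketch below shows one way a cost predictor could work. It is not ULOOF's actual model: it assumes, purely for illustration, that each cost (local execution time, local energy, or remote round-trip time) grows linearly with some input-size feature, and it fits a separate online least-squares line per method signature. The class name `MethodCostModel` and the input-size feature are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical per-method cost model: for each method signature, fit an online
 * least-squares line cost = a + b * inputSize from observed samples.
 * Illustrative stand-in only, not ULOOF's learning component.
 */
final class MethodCostModel {

    /** Running sums for one method's simple linear regression. */
    private static final class Stats {
        long n;
        double sumX, sumY, sumXY, sumXX;

        void add(double x, double y) {
            n++;
            sumX += x;
            sumY += y;
            sumXY += x * y;
            sumXX += x * x;
        }

        double predict(double x) {
            if (n == 0) {
                return Double.NaN; // no observations yet
            }
            double meanX = sumX / n;
            double meanY = sumY / n;
            double varX = sumXX / n - meanX * meanX;
            if (Math.abs(varX) < 1e-12) {
                return meanY; // all samples share one input size: fall back to the mean
            }
            double covXY = sumXY / n - meanX * meanY;
            double slope = covXY / varX;
            return meanY + slope * (x - meanX);
        }
    }

    private final Map<String, Stats> stats = new HashMap<>();

    /** Records one observed cost (e.g. milliseconds or millijoules) for a call of the given size. */
    void observe(String methodSignature, double inputSize, double cost) {
        stats.computeIfAbsent(methodSignature, k -> new Stats()).add(inputSize, cost);
    }

    /** Predicted cost for a call of the given size; NaN if the method was never observed. */
    double predict(String methodSignature, double inputSize) {
        Stats s = stats.get(methodSignature);
        return s == null ? Double.NaN : s.predict(inputSize);
    }
}
```

In such a design, one instance per metric (local time, local energy, remote time) would typically be kept, and its predictions would feed the offloading decision. Running sums make each update constant-time, which suits a battery-powered device, at the cost of some numerical precision for very large inputs.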

Additional details are in the related publications below.

Framework Demonstration

The ULOOF framework includes a smart decision engine that predicts the execution time and energy consumption of a mobile computation. The decision engine estimates the potential gain in both respects and decides whether to offload a given computation.
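A minimal sketch of the decision step follows, assuming the predicted local and remote costs are already available and that time and energy are combined through a weighted sum of relative gains. The weighting scheme and the names `OffloadDecision`, `Estimate` and `timeWeight` are assumptions for illustration, not ULOOF's published decision rule; the remote time is assumed to already include network latency and data transfer.

```java
/**
 * Hypothetical offloading decision: offload a call only if the weighted
 * relative gain in execution time and energy is positive.
 */
final class OffloadDecision {

    /** Predicted costs of one call; remote time is assumed to include latency and transfer. */
    static final class Estimate {
        final double localTimeMs;
        final double remoteTimeMs;
        final double localEnergyMj;
        final double remoteEnergyMj;

        Estimate(double localTimeMs, double remoteTimeMs,
                 double localEnergyMj, double remoteEnergyMj) {
            if (localTimeMs <= 0 || localEnergyMj <= 0) {
                throw new IllegalArgumentException("local costs must be positive");
            }
            this.localTimeMs = localTimeMs;
            this.remoteTimeMs = remoteTimeMs;
            this.localEnergyMj = localEnergyMj;
            this.remoteEnergyMj = remoteEnergyMj;
        }
    }

    private final double timeWeight; // weight of time gain; energy gain gets 1 - timeWeight

    OffloadDecision(double timeWeight) {
        if (timeWeight < 0 || timeWeight > 1) {
            throw new IllegalArgumentException("timeWeight must be in [0, 1]");
        }
        this.timeWeight = timeWeight;
    }

    /** Weighted relative gain of remote over local execution; positive favours offloading. */
    double gain(Estimate e) {
        double timeGain = (e.localTimeMs - e.remoteTimeMs) / e.localTimeMs;
        double energyGain = (e.localEnergyMj - e.remoteEnergyMj) / e.localEnergyMj;
        return timeWeight * timeGain + (1 - timeWeight) * energyGain;
    }

    boolean shouldOffload(Estimate e) {
        return gain(e) > 0;
    }
}
```

With `timeWeight = 0.5`, a call predicted at 1000 ms / 500 mJ locally and 300 ms / 200 mJ remotely gives a gain of 0.5 × 0.7 + 0.5 × 0.6 = 0.65, so under this rule it would be offloaded.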

To demonstrate our framework, we built two proof-of-concept Android applications:

(1) one for computing Fibonacci numbers;

(2) one for computing shortest routes, corresponding to a navigation application.

To download the ULOOFed applications, see the Download section below; the source code for testing the whole framework is linked there as well.

The following video shows how ULOOF works with the Fibonacci application.

Instrumenting an Android Application

Our post-compiler scans an Android mobile application and creates an offloading-enabled version of it, which is functionally equivalent to the original but able to offload computations to a remote server. Offloading decisions are taken at the granularity of individual application methods.

This is achieved through an automated analysis that detects which methods can be executed consistently on the server, and by minimally modifying the code of those methods so that they can run on a remote server.
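For readability, the sketch below shows at the Java source level what an instrumented method might look like; the real post-compiler works on the decompiled application rather than on source code. The original body is kept unchanged (`fibLocal`), and the entry point asks a decision hook whether to offload, falling back to local execution if the remote call fails. `RemoteExecutor`, `DecisionEngine` and the method names are hypothetical stand-ins for the ULOOF runtime.

```java
/** Hypothetical stand-in for the offloading runtime's remote call. */
interface RemoteExecutor {
    Object invoke(String className, String methodName, Object[] args) throws Exception;
}

/** Hypothetical stand-in for the local decision engine. */
interface DecisionEngine {
    boolean shouldOffload(String methodSignature, Object[] args);
}

/**
 * Source-level illustration of an instrumented method: fib() consults the
 * decision engine, tries the remote server, and falls back to the original body.
 */
final class Fibonacci {
    private final DecisionEngine engine;
    private final RemoteExecutor remote;

    Fibonacci(DecisionEngine engine, RemoteExecutor remote) {
        this.engine = engine;
        this.remote = remote;
    }

    long fib(int n) {
        Object[] args = {n};
        if (engine.shouldOffload("Fibonacci.fib(int)", args)) {
            try {
                return (Long) remote.invoke("Fibonacci", "fibLocal", args);
            } catch (Exception e) {
                // Network, server or type error: fall through to local execution.
            }
        }
        return fibLocal(n);
    }

    /** The original, unmodified method body (iterative Fibonacci). */
    long fibLocal(int n) {
        long a = 0, b = 1;
        for (int i = 0; i < n; i++) {
            long t = a + b;
            a = b;
            b = t;
        }
        return a;
    }
}
```

Keeping the original body as the fallback path is what preserves functional equivalence when the server is unreachable.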

Autonomous Method Selection

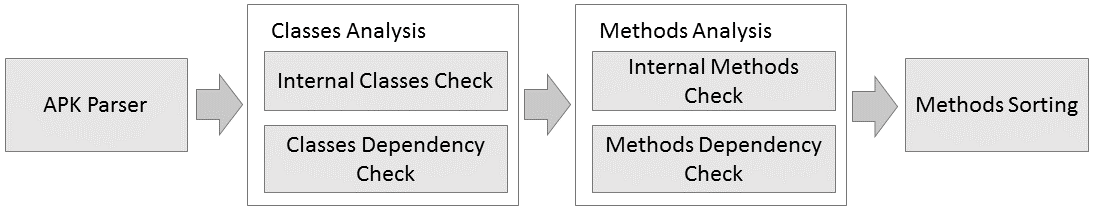

The post-compiler retrieves information from the application and analyses each class and method it contains. It first detects the structure of the application, then inspects each class found during this initial structure scan. Classes are thus classified as offloadable or non-offloadable.

The methods in the offloadable classes are then inspected with additional tests to determine whether they are suitable for offloading, as represented in the figure above.
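The two-stage selection (classes first, then methods within offloadable classes) can be sketched as a filter over a simplified model of the application. The rules used here, rejecting classes that extend device-bound Android framework types and methods that are native or touch such types, are illustrative assumptions; the actual tests applied by ULOOF are not reproduced here. `OffloadabilityFilter`, `ClassInfo` and `MethodInfo` are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Hypothetical two-stage offloadability filter; the rules are illustrative assumptions. */
final class OffloadabilityFilter {

    /** Simplified view of a method as seen by a post-compiler. */
    static final class MethodInfo {
        final String name;
        final boolean isNative;
        final List<String> paramTypes;
        final String returnType;
        final Set<String> referencedTypes; // types used inside the method body

        MethodInfo(String name, boolean isNative, List<String> paramTypes,
                   String returnType, Set<String> referencedTypes) {
            this.name = name;
            this.isNative = isNative;
            this.paramTypes = paramTypes;
            this.returnType = returnType;
            this.referencedTypes = referencedTypes;
        }
    }

    /** Simplified view of a class: its name, supertypes and methods. */
    static final class ClassInfo {
        final String name;
        final List<String> superTypes;
        final List<MethodInfo> methods;

        ClassInfo(String name, List<String> superTypes, List<MethodInfo> methods) {
            this.name = name;
            this.superTypes = superTypes;
            this.methods = methods;
        }
    }

    // Assumption: types from these packages only make sense on the local device.
    private static final List<String> DEVICE_BOUND_PREFIXES = List.of(
            "android.app.", "android.view.", "android.widget.", "android.hardware.");

    private static boolean isDeviceBound(String type) {
        return DEVICE_BOUND_PREFIXES.stream().anyMatch(type::startsWith);
    }

    /** Stage 1: a class is offloadable if none of its supertypes is device-bound. */
    static boolean isOffloadableClass(ClassInfo c) {
        return c.superTypes.stream().noneMatch(OffloadabilityFilter::isDeviceBound);
    }

    /** Stage 2: additional per-method tests inside an offloadable class. */
    static boolean isOffloadableMethod(MethodInfo m) {
        if (m.isNative) {
            return false; // native code may not be available on the server
        }
        if (isDeviceBound(m.returnType)
                || m.paramTypes.stream().anyMatch(OffloadabilityFilter::isDeviceBound)) {
            return false; // device-bound values cannot be shipped to the server
        }
        return m.referencedTypes.stream().noneMatch(OffloadabilityFilter::isDeviceBound);
    }

    /** Returns "ClassName.methodName" for every method passing both stages. */
    static List<String> selectMethods(List<ClassInfo> classes) {
        List<String> selected = new ArrayList<>();
        for (ClassInfo c : classes) {
            if (!isOffloadableClass(c)) {
                continue;
            }
            for (MethodInfo m : c.methods) {
                if (isOffloadableMethod(m)) {
                    selected.add(c.name + "." + m.name);
                }
            }
        }
        return selected;
    }
}
```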

Method Translation and Optimization

Our post-compiler translates every method retained by the method selection algorithm into Jimple, the intermediate representation introduced by the Soot framework, and optimizes it. The resulting methods can be executed on a remote server at runtime regardless of the types of the arguments passed to them and of whether the method is static or non-static.
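On the server side, executing a method regardless of whether it is static or non-static can be illustrated with Java reflection: a static method is invoked with a `null` receiver, an instance method with the object shipped from the device. This sketch assumes the method's class is available on the server and that the arguments have already been deserialized; `RemoteInvoker` is a hypothetical name, while `Class.forName`, `getDeclaredMethod`, `Modifier.isStatic` and `Method.invoke` are standard Java reflection APIs.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;

/**
 * Hypothetical server-side dispatcher: invokes a named method reflectively,
 * using a null receiver for static methods and the given target otherwise.
 * Primitive parameters are described by their Class objects (e.g. int.class);
 * Method.invoke unboxes the corresponding arguments.
 */
final class RemoteInvoker {

    static Object invoke(String className, String methodName, Class<?>[] paramTypes,
                         Object target, Object[] args) throws ReflectiveOperationException {
        Class<?> cls = Class.forName(className);
        Method method = cls.getDeclaredMethod(methodName, paramTypes);
        method.setAccessible(true); // offloaded application methods may be private
        boolean isStatic = Modifier.isStatic(method.getModifiers());
        if (!isStatic && target == null) {
            throw new IllegalArgumentException(
                    "instance method " + methodName + " needs a target object");
        }
        return method.invoke(isStatic ? null : target, args);
    }
}
```

For example, invoking `java.lang.Math.max` with parameter types `int.class, int.class`, a `null` target and arguments `3, 7` returns `7`, while invoking `String.concat` on the target `"ab"` with argument `"cd"` returns `"abcd"`.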

The post-compiler also adds dynamic object management between the mobile application and the remote server: each time a computation is executed remotely, every instance involved in the offloading is kept up to date, regardless of where the computation runs.
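One way to keep instances up to date can be sketched under the assumption that the server sends back its modified copy of each object: the device assigns every shipped object an id and, on return, copies the remote copy's fields into the original local instance, so that references held elsewhere in the application see the new state. `ObjectRegistry` is hypothetical; the copy is shallow and final fields are skipped, so nested objects would need further handling.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.HashMap;
import java.util.IdentityHashMap;
import java.util.Map;

/**
 * Hypothetical client-side registry: objects shipped to the server get an id,
 * and the server's returned copy is merged back into the original instance.
 */
final class ObjectRegistry {
    private final Map<Long, Object> byId = new HashMap<>();
    private final Map<Object, Long> ids = new IdentityHashMap<>(); // identity, not equals()
    private long nextId = 1;

    /** Returns the id of obj, assigning a new one the first time it is seen. */
    synchronized long register(Object obj) {
        Long id = ids.get(obj);
        if (id == null) {
            id = nextId++;
            ids.put(obj, id);
            byId.put(id, obj);
        }
        return id;
    }

    /** Shallow-copies the non-static, non-final fields of remoteCopy into the local instance. */
    synchronized void syncFromRemote(long id, Object remoteCopy) throws IllegalAccessException {
        Object local = byId.get(id);
        if (local == null || local.getClass() != remoteCopy.getClass()) {
            throw new IllegalArgumentException("unknown id or type mismatch: " + id);
        }
        for (Class<?> c = local.getClass(); c != null && c != Object.class; c = c.getSuperclass()) {
            for (Field f : c.getDeclaredFields()) {
                int mod = f.getModifiers();
                if (Modifier.isStatic(mod) || Modifier.isFinal(mod)) {
                    continue; // class-level and final fields are not synchronized in this sketch
                }
                f.setAccessible(true);
                f.set(local, f.get(remoteCopy));
            }
        }
    }
}
```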

Publications

Download

The full ULOOF framework project is open source and available here.

You can download the demonstration applications, which offload method calls to a VM running at LIP6 depending on your network latency to our servers in Paris.

NB: you will need to allow the installation of APKs from sources other than Google Play.

People

This work was initiated within the French-Brazilian CNRS - FAP exchange collaborative project WINDS (Systems for Mobile Cloud Computing), between LIP6/University Pierre and Marie Curie - Sorbonne (UPMC, Paris, France) and the Federal University of Minas Gerais (UFMG, Belo Horizonte, Brazil), and within the ANR ABCD project. It was subsequently supported by the FUI PODIUM project. These projects are coordinated by Prof. Rami Langar and Prof. Stefano Secci.

Involved people:

José Leal (UFMG, visiting student at LIP6, now at Google Inc.),

Daniel Macedo, José Nogueira (UFMG, visiting professors at LIP6),

Se-young Yu (LIP6, now postdoc at Northwestern University, IL, USA),

Alessandro Zanni (LIP6, previously Ph.D. student at UNIBO),

Paolo Bellavista (UNIBO, visiting professor at LIP6),

Alessio Diamanti (LIP6, graduate student at UNIBO),

Shuai Yu (LIP6, Ph.D. graduate of UPMC, now postdoc at Sun Yat-Sen University, China),

Boutheina Dab (LIP6, now postdoc at Orange Labs, Chatillon, France),

Rami Langar (UPEM, previously Associate Professor at LIP6),

Stefano Secci (Cnam, previously Associate Professor at LIP6).

If you want to join the team or help, please drop us an e-mail.

Contacts

For any suggestions, comments or special requests do not hesitate to contact us.

rami.langar AT u-pem.fr

LIGM/UPEM, University Paris East Marne-la-Vallee

5 Bld Descartes, Champs-sur-Marne, 77454 Marne-la-Vallee, France

stefano.secci AT lip6.fr

LIP6, University Pierre and Marie Curie

4 Place Jussieu, 75005 Paris, France